As part of CPO Circles, I convene roundtables every month with some of the smartest people I know, to discuss the things that they’re challenged by. We talk through these issues together, sharing experiences and advice - and when that fails, as it occasionally must, we commiserate.

I take notes from every one of these discussions, and put them behind a paywall. Today, I’m happy to share the framework that came out of a discussion with Antoine Fourmont-Fingal that started at one of the roundtables, and continued to develop.

That word - framework - is important. This approach doesn’t tell you what to do in a prescriptive way - there’s an art and a science to that, and it’s highly context-specific. Instead, it gives you an approach to have better discussions about the opportunities that are under consideration, so that the ROI can be estimated and the work prioritised in a collaborative way.

Introduction

It can feel like “AI” turns up not only in every conversation we have at work these days, but sometimes in every sentence. When you hear it that often, it becomes meaningless - people might as well be saying ‘Magic Pixie Fairy Dust.’

So, while there's a lot of hype - and a lot of value - from new approaches, we need to be mindful of what we mean. There are 3 major topics that come up in Product Development:

- Using AI-powered tools to change how we work

- Adding AI-enabled features into our products

- And most importantly, understanding how to talk about the above - both what opportunities there are, and how to prioritise and plan to take advantage of them.

It’s that last point that gets the least attention. A recent chat at one of our CPO Circles Roundtables centred on using the Cynefin framework as the basis for how we could have better discussions, enabling us to work across our management teams to make better decisions, faster.

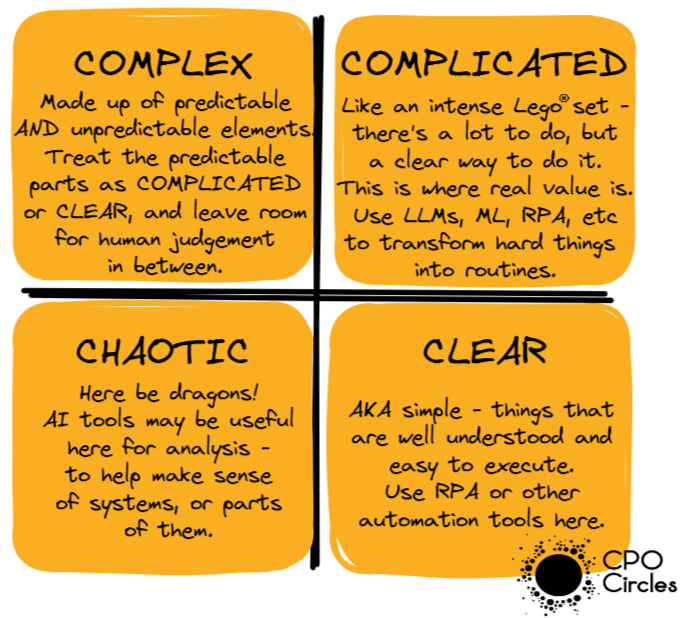

You might have come across Cynefin over the past few years - it’s a Welsh word, pronounced ‘Keh-nevin’, and the Curriculum for Wales defines it as 'the place where we feel we belong, where the people and landscape around us are familiar, and the sights and sounds are reassuringly recognisable.’ Dave Snowden’s been working with it since the turn of the century, and uses it as a way to aid decision-making, classifying things into 4 specific domains:

- Clear

- Complicated

- Complex

- Chaotic

A fifth domain (Disorder) is sometimes included. We’re going to ignore it for the purposes of this approach, as Chaotic covers the use case sufficiently. You can read more about Dave’s work at The Cynefin Company.

In our CPO Circles roundtable, we were inspired by this framework and used it as a rough guide to prioritising product development work. While there are plenty of models for prioritisation out there that can be used - MoSCoW, ICE, RICE, etc - it became clear that the word ‘AI’ amplifies the subjectivity of these tools. That is, people would underestimate the effort required to do the work and overestimate the gains, by a much larger margin than normally occurs. We needed a new approach to ensure a better discussion: not about whether to use AI tools, but about which ideas were most likely to be successful, what the effort and reward might be, and what approach to take.

In the end, it comes down to something annoyingly simple that pops up again and again: the right tool for the job is the one that helps you have a more informed discussion, faster, so that better decisions can be made at pace. As we’ve tried this with our friends and colleagues, we’ve found that using the Cynefin model as a base does just that:

- It forces people to be informed about how often the feature will be used, and the real value it saves or creates

- It deals with the levels of risk and complexity involved

- It moves the discussion into the realm of tangible business decisions and tradeoffs, and away from Magic Pixie Fairy Dust and ‘Can’t we JUST do X?’

- It helps the Product Development team to better understand why these things are being asked for, and helps the Business operations teams to become invested in the development process - encouraging the teams to communicate and partner in seeking the most expedient approaches to achieving genuine business goals.

One more thing: we’ve tested this approach against the idea of automating existing processes, or of creating new processes where automation makes them feasible and viable. There are other use cases for AI-based approaches, but these are where the majority of day-to-day opportunities come up, and where the lowest-hanging fruit still lies in most companies right now.

In Part 1 of this article (below), we’ll talk about placing things into the correct quadrants. In Part 2, we’ll dig deeper into prioritising things within those quadrants.

How to use this approach:

Discovery

First, we need to come to an agreement on how to approach the opportunity that’s being presented. That involves qualifying which quadrant is most applicable. To do this, we ask a series of questions. We’ll use the example of a car dealership to illustrate how to apply it in practice.

Yes, this sounds basic - and there’s a good reason for that. This is the foundation of any decent user story, and the basis of any decent canvas. There are good reasons we keep using it.

Tips:

- Push for concrete, scenario-driven answers, not vague hopes.

- Use real process maps or customer journeys when possible.

- “If it feels abstract, it probably is—push for detail.”

Example:

“A customer visits the dealership’s website at 10 PM with a question about trade-in value. Instead of waiting for office hours, the genAI chatbot answers instantly, collects details, and books an in-person assessment for the next day. Before: email left unanswered until morning. After: lead captured before competitors can.”

If you’re looking to create scale or find efficiencies, it’s critical that the process being addressed is one that is suitable - one that is stable enough that the proposed approach is both viable and feasible.

Tips:

- Distinguish internal process stability and external customer/market shifts.

- Internal stability: Are dealer scripts/processes stable, or is staff always improvising?

- External stability: Are customer needs/market context shifting quickly (e.g., new EV incentives)?

- If changes are frequent, note what triggers them.

Example:

“Internally: The process for handling trade-ins is quite stable—same forms and checks for years. Externally: Customer preferences shift more with season and new car releases, but the core need for fair pricing hasn’t changed.”

This won’t come as a surprise - any strategic decision must be based on both why the thing is being done and how you’ll collectively know it’s been successful. Skipping this risks a lack of alignment. Doing it means that the people actively working on the opportunity understand the scope, allowing them to work on suitable approaches and make critical prioritisation decisions.

Tips:

- Probe for cause-and-effect clarity: Is there a direct line between the AI’s output and the business outcome?

- Use clear metrics: “What numbers move, and why?”

- Flag answers like “maybe, probably” as signals of complexity.

Example:

“If the chatbot reduces call wait times by 30%, we can directly measure drop in abandoned support calls. Harder: If sales go up, other campaigns or promotions may play a role, so we need to track those too before attributing change to AI alone.”

This might seem redundant, as it’s not materially different from the previous question. In our experience, it’s always good to go the extra mile and confirm this with key partners to ensure there’s not been any misunderstanding.

Tips:

- Ask each to explain in their words; surface gaps explicitly.

- Run a “repeat the brief” exercise: ask each group for their one-sentence goal.

- Misalignment = complexity or chaos.

- Ideal: Everyone converges on a similar story.

Example:

“CEO: We can save so much on staff! It's just a question of time before AI becomes super intelligent, we have to be in the wave and accept the massive changes - our competitors will do it, and we'll be left behind.”

From the outside, AI tooling looks like magic. When someone asks, ‘Can’t we just do this?’, they’re missing the fact that ‘just’ is the most dangerous word in product development - it ensures that the reality of how things are developed and delivered is papered over. For this step, we like to use Liz Keogh’s estimate multiplier for estimating complexity:

5x - Nobody in the world has ever done this before.

4x - Someone in the world did this, but not in our organization (and probably at a competitor).

3x - Someone in our company has done this, or we have access to expertise.

2x - Someone in our team knows how to do this.

1x - We all know how to do this.
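As a rough illustration of how the multiplier can be used in a discussion, here is a minimal Python sketch. It assumes the team already has a baseline estimate (in days) for the work as if everyone knew how to do it; the names `Familiarity` and `adjusted_estimate`, and the 10-day figure, are hypothetical and only there to show the arithmetic.

```python
from enum import IntEnum


class Familiarity(IntEnum):
    """The five levels of the scale above; the value is the multiplier."""
    EVERYONE_KNOWS = 1      # We all know how to do this.
    SOMEONE_IN_TEAM = 2     # Someone in our team knows how to do this.
    SOMEONE_IN_COMPANY = 3  # Someone in our company has done this, or we have access to expertise.
    SOMEONE_ELSEWHERE = 4   # Someone in the world did this, but not in our organization.
    NEVER_DONE = 5          # Nobody in the world has ever done this before.


def adjusted_estimate(base_days: float, level: Familiarity) -> float:
    """Scale a baseline estimate by the familiarity multiplier.

    Assumption: base_days is what the work would take if the whole
    team already knew how to do it (the 1x case).
    """
    if base_days < 0:
        raise ValueError("base_days must not be negative")
    return base_days * int(level)


# Hypothetical example: a 10-day baseline for work that has been done
# elsewhere, but never inside our own organisation.
print(adjusted_estimate(10, Familiarity.SOMEONE_ELSEWHERE))  # 40
```

The number itself matters less than the conversation it forces: agreeing on which level applies is usually where the hidden assumptions surface.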

Tips:

- Ask for details on actual AI work—implementation, tuning—not just strategy or buzz.

- Ask for actual project names, not just “worked with AI.”

- Check for depth: data labeling, tuning, troubleshooting—not just AI “strategy.”

- If all expertise is external, note the risk of “learn-as-we-go.”

Example:

“We’ve contracted vendors for some basic ML recommender engines, but have never built, fine-tuned, or integrated genAI ourselves. The closest we have is our API team, who maintains the vendor interface.”

Your outputs are only ever as good as the information they’re based on - it’s impossible to make good decisions from bad data. It’s critical to understand what data you’re working with, and how easy or hard it will be to work with it.

Tips:

- Ask for examples: “Show me the data fields.”

- Probe freshness: “When was this last updated?”

- Are there gaps or protected privacy data?

- Data quality is a big topic - the data needs to be relevant, sufficient, and unbiased.

Example:

“Website chat logs and call transcripts are stored for just 90 days; stored in different formats, with no labeling for topic or customer satisfaction. Customer records are in another system, so linking will need new work. Sentiment analysis is going to be tricky, as sarcasm doesn’t get analysed well. And we may have trouble with handling multiple languages.”

This is a critical step, and it’s the key to understanding if the work will enable straight-through processing or if it's enabling humans to make better decisions, faster. This is the fundamental difference between the Complicated and Complex domains - and discovery here also sometimes leads to an understanding that the opportunity is actually classified as Chaotic. We’ll talk more about what to do with this information in the next section.

Some versions of Cynefin add another domain, Disorder. Roughly, this equates to ‘we don’t know if we can know.’ For our purposes, we’ll lump this in with Chaotic as things that are not currently understood well enough to be mapped.

Tips:

- Use diagrams and swimlanes to expose gaps, silos, or fragile links - even whiteboard photos are fine.

- Identify hand-offs between people, teams, and systems.

- Gaps or ambiguities signal complexity.

Example:

“Customer question arrives via website chat: routed to genAI, question answered, details stored in CRM, triggers email to manager. But if the customer calls instead, that’s a separate process—handled by the phone team with no AI, data not linked.”

What domain does it belong in?

These questions give us the context needed to understand which domain the request lives in:

Clear

The process exists, it’s well understood, and very straightforward. The data is easily available in a structured format, and we can automate the rules without any significant issue. There is minimal risk of disagreement between humans over solutions, and minimal risk associated with the approach.

Things in this quadrant are ripe for automation, and can usually be handled by logical processes, such as Robotic Process Automation (RPA).

Complicated

We understand the process, but it takes some doing - the rules are well understood, but it takes some time, effort, and expertise to implement them. That may be because the data is unstructured, not easily available, or the rules have some nuance to them. The risk and/or uncertainty here is non-trivial, but we believe that it’s possible to deal with it. The consequences of any decision made along the process can be mapped, traced back, and understood.

Things in this quadrant are the most obvious opportunities for efficiency - the biggest savings or advantages come from taking something that is cumbersome and making it faster and cheaper. The tooling available - be it RPA, ML, LLM, or something else - means that things which were out of reach in the past are now amazing opportunities. This area is where you'd typically need "experts" and where LLMs (theoretically! Your mileage may vary) can shine by dodging "entrained thinking" and analysis paralysis.

The critical part is to include the guardrails needed with regards to exceptions and unexpected consequences.

Complex

We understand what the process is for - the results should be within a predictable range, but there are challenges:

- How the results are obtained isn’t always easy to document into a clear set of rules,

- Some decisions in the process have a dynamic impact on things further in the process, sometimes in unpredictable ways,

- Or we’re dealing with unstructured or incomplete data, which creates challenges for analysis,

- Or human judgement is needed for other reasons - especially when we’re dealing with new processes or approaches to them.

In these cases, we can often create economies, but not automate end-to-end. The opportunities here come from finding the Complicated and Clear elements of the Complex situation, leaving humans in the loop with sufficient context to make decisions at specific points in the process.

Chaotic

These systems are poorly understood or seem unpredictable by their very nature. They’re often dynamic situations, evolving on a regular basis - a decision made today, given specific inputs, may not be the same one that would be made tomorrow.
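To make the four descriptions easier to compare, here is a minimal Python sketch that turns a handful of discovery answers into a suggested starting domain. It is a conversation aid, not a decision rule: the field names, the yes/no simplification, and the order of the checks are our own assumptions for illustration, and are not part of Cynefin itself.

```python
from dataclasses import dataclass
from enum import Enum


class Domain(Enum):
    CLEAR = "Clear"
    COMPLICATED = "Complicated"
    COMPLEX = "Complex"
    CHAOTIC = "Chaotic"


@dataclass
class Discovery:
    """Hypothetical yes/no summary of the discovery answers."""
    purpose_understood: bool      # Do we know what the process is for?
    rules_documentable: bool      # Can the steps be written down as clear rules?
    consequences_traceable: bool  # Can decisions be mapped and traced back?
    data_structured: bool         # Is the data easily available in a structured format?
    low_risk: bool                # Minimal risk and minimal disagreement over solutions?


def suggest_domain(d: Discovery) -> Domain:
    """Suggest a starting domain for the discussion (a heuristic only)."""
    if not d.purpose_understood:
        # Poorly understood or unpredictable by nature.
        return Domain.CHAOTIC
    if not (d.rules_documentable and d.consequences_traceable):
        # Purpose is known, but rules or knock-on effects are not.
        return Domain.COMPLEX
    if d.data_structured and d.low_risk:
        # Well understood, structured data, little to argue about.
        return Domain.CLEAR
    # Understood, but it takes effort and expertise to implement.
    return Domain.COMPLICATED


# Hypothetical reading of the dealership trade-in chatbot: a stable,
# traceable process, but unlabelled data in several formats.
trade_in_chatbot = Discovery(
    purpose_understood=True,
    rules_documentable=True,
    consequences_traceable=True,
    data_structured=False,
    low_risk=False,
)
print(suggest_domain(trade_in_chatbot).value)  # Complicated
```

If two people fill in the answers differently for the same opportunity, that disagreement is itself useful input to the discussion.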